4.1. Optimization Complexity

Computational Complexity

Memory Complexity

Cognitive Complexity

Cyclomatic Complexity

Big O notation [1]

https://dev.to/alvbarros/learn-big-o-notation-once-and-for-all-hm9

4.1.1. Computational Complexity

4.1.2. Memory Complexity

4.1.3. Cognitive Complexity

Measure of how difficult the application is to understand

Measure of how hard the control flow of a function is to understand

Functions with high Cognitive Complexity will be difficult to maintain.

4.1.4. Cyclomatic Complexity

Measures the minimum number of test cases required for full test coverage.

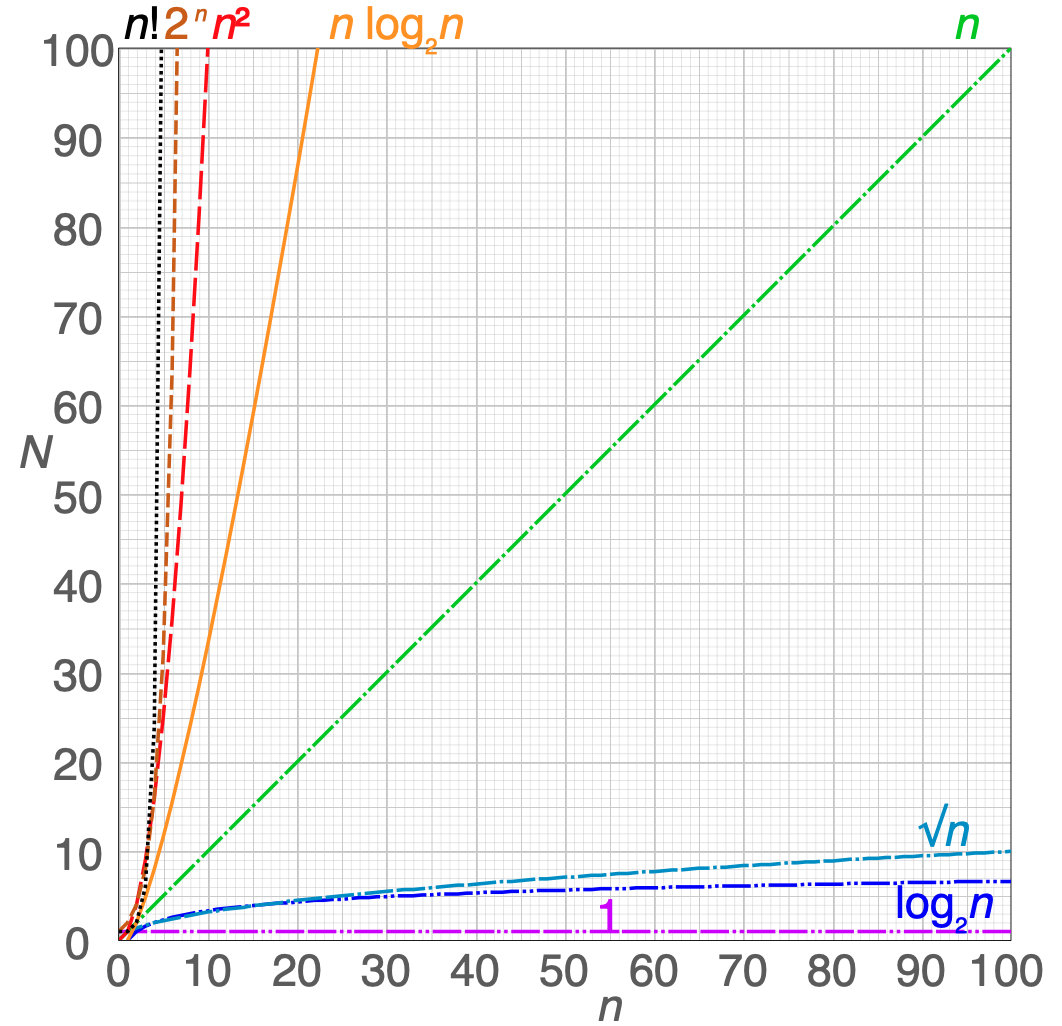

4.1.5. Big O notation

O(sqrt_n)O(log_n)O(log2_n)O(1)O(n)O(nlog2_n)O(n^2)O(2^n)O(n!)

Running time (T(n)) |

Name |

Example algorithms |

|---|---|---|

|

constant time |

Finding the median value in a sorted array of numbersCalculating (−1)n |

|

inverse Ackermann time |

Amortized time per operation using a disjoint set |

|

iterated logarithmic time |

Distributed coloring of cycles |

|

log-logarithmic |

Amortized time per operation using a bounded priority queue |

|

logarithmic time |

Binary search |

|

polylogarithmic time |

|

|

fractional power |

Searching in a kd-tree |

|

linear time |

Finding the smallest or largest item in an unsorted array, Kadane's algorithm, linear search |

|

n log-star n time |

Seidel's polygon triangulation algorithm |

|

linearithmic time |

Fastest possible comparison sort; Fast Fourier transform |

|

quasilinear time |

|

|

quadratic time |

Bubble sort; Insertion sort; Direct convolution |

|

cubic time |

Naive multiplication of two n×n matrices. Calculating partial correlation |

|

polynomial time |

Karmarkar's algorithm for linear programming; AKS primality test |

|

quasi-polynomial time |

Best-known O(log2 n)-approximation algorithm for the directed Steiner tree problem |

|

sub-exponential time |

Best-known algorithm for integer factorization; formerly-best algorithm for graph isomorphism |

|

sub-exponential time |

Best-known algorithm for integer factorization; formerly-best algorithm for graph isomorphism |

|

exponential time (with linear exponent) |

Solving the traveling salesman problem using dynamic programming |

|

exponential time |

Solving matrix chain multiplication via brute-force search |

|

factorial time |

Solving the traveling salesman problem via brute-force search |

|

double exponential time |

Deciding the truth of a given statement in Presburger arithmetic |